Installation Overview

Classic nodes

x86 nodes

These nodes are classical machines with high-end processors: two sockets with 32 cores each (64 cores in total) and 1 TB of DDR4 memory. You can get more details by checking the output of `cat /proc/cpuinfo` or their Slurm definition with `scontrol show node c7-<number>`.

```
$ cat /proc/cpuinfo
...
processor : 63
vendor_id : GenuineIntel
cpu family : 6
model : 106
model name : Intel(R) Xeon(R) Gold 6338 CPU @ 2.00GHz
stepping : 6
microcode : 0xd000375
cpu MHz : 2000.000
cache size : 49152 KB
physical id : 1
siblings : 32
core id : 31
cpu cores : 32
apicid : 190
initial apicid : 190
fpu : yes
fpu_exception : yes
cpuid level : 27
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 invpcid_single intel_ppin ssbd mba ibrs ibpb stibp ibrs_enhanced fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid cqm rdt_a avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb intel_pt avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local split_lock_detect wbnoinvd dtherm ida arat pln pts hwp hwp_act_window hwp_epp hwp_pkg_req avx512vbmi umip pku ospke avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg tme avx512_vpopcntdq la57 rdpid fsrm md_clear pconfig flush_l1d arch_capabilities
bugs : spectre_v1 spectre_v2 spec_store_bypass swapgs mmio_stale_data eibrs_pbrsb
bogomips : 4022.42
clflush size : 64
cache_alignment : 64
address sizes : 46 bits physical, 57 bits virtual
power management:
```
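If you want these figures programmatically, for example to size a job, the following is a minimal Python sketch that summarizes `/proc/cpuinfo` text. It assumes the x86 layout shown above, where each processor block lists `physical id` before `core id`; the function name `summarize_cpuinfo` is hypothetical and not part of any CESGA tool.

```python
def summarize_cpuinfo(text: str) -> dict:
    """Summarize x86 /proc/cpuinfo text: model, logical CPUs, sockets, physical cores."""
    logical_cpus = 0
    sockets = set()
    cores = set()  # (physical id, core id) pairs identify one physical core
    model = None
    current_socket = None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # skip blank separator lines between processor blocks
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical_cpus += 1
        elif key == "model name" and model is None:
            model = value
        elif key == "physical id":
            current_socket = value
            sockets.add(value)
        elif key == "core id":
            cores.add((current_socket, value))
    return {
        "model": model,
        "logical_cpus": logical_cpus,
        "sockets": len(sockets),
        "physical_cores": len(cores),
    }
```

On an x86 node, calling it on the contents of `/proc/cpuinfo` should report 2 sockets and 64 physical cores, consistent with the output above.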

a64 nodes

These are classical nodes with Arm processors, intended to run quantum emulation jobs with high efficiency. Each node has one A64FX processor with SVE (Scalable Vector Extension) support and 32 GB of HBM2 memory. Each processor is divided into 4 Core Memory Groups (CMGs) of 12 cores each. To obtain detailed information, check the output of `cat /proc/cpuinfo` or the node's Slurm definition with `scontrol show node c7-101`.

```
$ cat /proc/cpuinfo
...
processor : 47
BogoMIPS : 200.00
Features : fp asimd evtstrm sha1 sha2 crc32 atomics fphp asimdhp cpuid asimdrdm fcma dcpop sve
CPU implementer : 0x46
CPU architecture: 8
CPU variant : 0x1
CPU part : 0x001
CPU revision : 0
```
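When placing work on an A64FX node, it can help to know which CMG a core belongs to. The sketch below checks the `Features` line for `sve` and maps a core number to its CMG. The mapping assumes cores 0-11 belong to CMG 0, 12-23 to CMG 1, and so on; that numbering is an assumption, so confirm the real layout on the node with `numactl --hardware` before relying on it. The names `has_sve`, `cmg_of` and `cores_in_cmg` are hypothetical.

```python
CORES_PER_CMG = 12  # from the description above: 4 CMGs of 12 cores each
NUM_CMGS = 4


def has_sve(cpuinfo_text: str) -> bool:
    """Return True if a 'Features' line in /proc/cpuinfo lists the sve flag."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features" and "sve" in value.split():
            return True
    return False


def cmg_of(core: int) -> int:
    """Map a core number to its CMG.

    ASSUMPTION: cores are numbered contiguously per CMG
    (0-11 -> CMG 0, 12-23 -> CMG 1, ...). Verify with `numactl --hardware`.
    """
    if not 0 <= core < CORES_PER_CMG * NUM_CMGS:
        raise ValueError(f"core {core} outside 0-{CORES_PER_CMG * NUM_CMGS - 1}")
    return core // CORES_PER_CMG


def cores_in_cmg(cmg: int) -> range:
    """Return the (assumed) core numbers that belong to one CMG."""
    if not 0 <= cmg < NUM_CMGS:
        raise ValueError(f"CMG {cmg} outside 0-{NUM_CMGS - 1}")
    return range(cmg * CORES_PER_CMG, (cmg + 1) * CORES_PER_CMG)
```

One possible layout is one process per CMG, each pinned to the 12 cores returned by `cores_in_cmg`, so that each process works on its local share (8 GB) of the HBM2 memory.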

Frontal node

It has the same hardware characteristics as any other x86 node.

This node acts as a classical machine closely attached to the quantum hardware, with the aim of optimizing circuit I/O to the Quantum Computer. It does so through a client-server approach based on ZMQ (ZeroMQ). This dependency is almost transparent to the user: it is handled by our Python module qmio.
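A client-server exchange over ZMQ can be illustrated with its request-reply pattern; whether qmio uses exactly this socket pattern is an assumption. The following minimal sketch uses pyzmq (assumed installed, `pip install pyzmq`): a REQ socket plays the client that submits a circuit and a REP socket plays the server that answers. The endpoint name, the reply format and the function name `submit_circuit` are hypothetical, and the sketch does not reflect qmio's actual protocol. It uses the in-process transport so it runs in a single Python process, whereas a real deployment would use a network transport such as `tcp://`.

```python
import zmq

ENDPOINT = "inproc://qpu-gateway"  # hypothetical endpoint; a real setup would use tcp://host:port


def submit_circuit(circuit: str) -> str:
    """Send one circuit over a REQ/REP pair and return the server's reply.

    Both ends live in this process so the sketch runs on its own;
    the reply format is a hypothetical placeholder, not qmio's protocol.
    """
    ctx = zmq.Context()
    server = ctx.socket(zmq.REP)  # stands in for the control-server side
    server.bind(ENDPOINT)  # bind before connect (required for inproc on older libzmq)
    client = ctx.socket(zmq.REQ)  # stands in for the frontal-node client
    client.connect(ENDPOINT)
    try:
        client.send_string(circuit)  # client: submit the circuit
        received = server.recv_string()  # server: receive it
        server.send_string(f"executed: {received}")  # server: reply with a mock result
        return client.recv_string()  # client: wait for the reply
    finally:
        client.close(linger=0)
        server.close(linger=0)
        ctx.term()
```

The REQ/REP pair enforces a strict send-receive alternation, which matches a submit-and-wait-for-result workflow.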

Quantum node

Quantum Computer

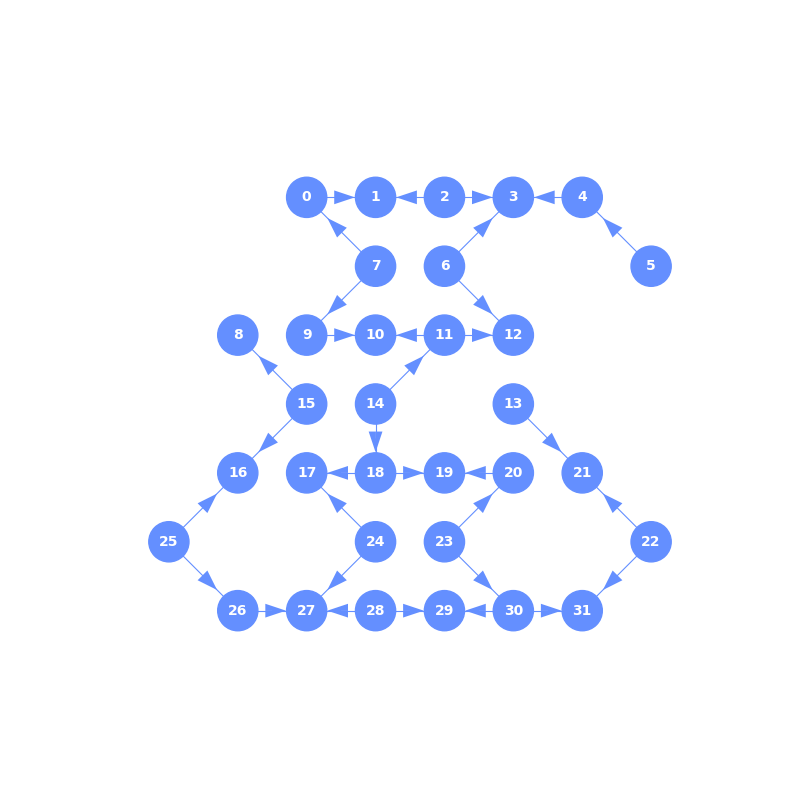

The QPU is a superconducting processor from Oxford Quantum Circuits (OQC). It consists of 32 coaxmon qubits, which are controlled by microwave pulses generated by the QAT software.

Topology:

To be Confirmed

Warning

The qubit IDs in the image are a logical remapping. Use these IDs in OpenQASM files. If you use OpenPulse grammar, you will need the physical IDs instead. The remapping preserves order, so logical and physical IDs follow the same sequence.

Logical IDs: 0 to 31

Physical IDs: 1 to 35, with 1, 10 and 16 missing
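Because the remapping preserves order, it can be written as a lookup table. This is a sketch derived only from the ranges listed in this warning; check it against the official topology before using it in OpenPulse programs. The names `PHYSICAL_IDS`, `to_physical` and `to_logical` are hypothetical.

```python
MISSING_PHYSICAL = {1, 10, 16}  # physical IDs listed above as missing
# Physical IDs 1-35 without the missing ones, in increasing order.
PHYSICAL_IDS = [p for p in range(1, 36) if p not in MISSING_PHYSICAL]
assert len(PHYSICAL_IDS) == 32  # one physical ID per logical qubit 0-31


def to_physical(logical: int) -> int:
    """Map a logical qubit ID (OpenQASM) to its physical ID (OpenPulse)."""
    if not 0 <= logical < len(PHYSICAL_IDS):
        raise ValueError(f"logical qubit {logical} out of range 0-31")
    return PHYSICAL_IDS[logical]


def to_logical(physical: int) -> int:
    """Map a physical qubit ID back to its logical ID."""
    try:
        return PHYSICAL_IDS.index(physical)
    except ValueError:
        raise ValueError(f"physical qubit {physical} does not exist") from None
```

For example, logical qubit 8 maps to physical qubit 11, because physical ID 10 is skipped.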

Storage

As a user, you have several storage options with different capabilities, which you can access through the environment variables listed below. For more information on HOME or STORE, see <<STORAGE.url>>. TBD

$HOME: Same as on other CESGA systems

$STORE: Same as on other CESGA systems

$LUSTRE: Specific to this system

Check quotas with the myquota command.

```
$ myquota
- HOME and Store filesystems:
--------------------------
Filesystem space quota limit files quota limit
/mnt/Q_SWAP 798M 100G 101G 25337 100k 101k
/mnt/netapp2/Home_FT2 6220M 10005M 10240M 79067 100k 101k
- LUSTRE filesystem:
-----------------
Filesystem used quota limit grace files quota limit grace
/lustre 4k 1T 1.172T - 1 200000 240000 -
```
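For scripts that should warn before a quota is reached, the space columns of this output can be parsed. The sketch below assumes the column layout shown above (filesystem, used space, space quota, ...) and binary size suffixes (`k`, `M`, `G`, `T` as powers of 1024); both are assumptions about `myquota`, and the function names are hypothetical.

```python
# ASSUMPTION: binary suffixes (k = 1024 bytes, M = 1024 k, ...).
UNITS = {"k": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size: str) -> float:
    """Convert a myquota size such as '798M' or '1.172T' to bytes."""
    if size[-1] in UNITS:
        return float(size[:-1]) * UNITS[size[-1]]
    return float(size)


def quota_usage(output: str) -> dict[str, float]:
    """Return the used/quota space fraction per filesystem from myquota output."""
    usage = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows start with a mount point; headers and separators do not.
        if len(fields) < 3 or not fields[0].startswith("/"):
            continue
        used, quota = to_bytes(fields[1]), to_bytes(fields[2])
        usage[fields[0]] = used / quota
    return usage


def near_quota(usage: dict[str, float], threshold: float = 0.9) -> list[str]:
    """List filesystems whose space usage is at or above the threshold."""
    return [fs for fs, frac in usage.items() if frac >= threshold]
```

On the system, the live output could be passed in with `quota_usage(subprocess.run(["myquota"], capture_output=True, text=True).stdout)`.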

Network infrastructure

All classical nodes in the system are connected through InfiniBand.

Integration

The Quantum Computer is tightly integrated with the cluster through the same network infrastructure. It comes with a classical control server that acts as the gateway to the various quantum control instruments, which communicate with the QPU through analog signals.

Our implementation connects to this quantum control server as a client-server pair through the frontal node, which is a node of the ilk partition adapted for this purpose.

As mentioned above, the two nodes communicate through a client-server approach designed to minimize communication time and to optimize circuit I/O with the quantum computer. With this technique we have optimized hybrid routines that require many classical optimization steps interleaved with quantum processing.
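To see why round-trip time matters for hybrid routines, consider a toy variational loop. In this sketch, `MockQPU.expectation` is a hypothetical stand-in for submitting a circuit to the QPU; it returns the exact value cos(θ) of a single-qubit rotation instead of a shot-based estimate. The gradient uses the parameter-shift rule, which costs two circuit executions per optimizer step. Nothing here is the qmio API.

```python
import math


class MockQPU:
    """Hypothetical QPU stand-in: 'runs' RX(theta) on |0> and returns <Z> = cos(theta).

    A real backend would return a shot-based estimate; this one is exact
    and counts how many circuit submissions (round trips) were made.
    """

    def __init__(self) -> None:
        self.calls = 0

    def expectation(self, theta: float) -> float:
        self.calls += 1
        return math.cos(theta)


def minimize(qpu: MockQPU, theta: float = 0.5, lr: float = 0.4, steps: int = 50) -> float:
    """Gradient descent on <Z>(theta), interleaving classical updates with QPU calls."""
    for _ in range(steps):
        # Parameter-shift rule: two circuit executions per gradient evaluation.
        plus = qpu.expectation(theta + math.pi / 2)
        minus = qpu.expectation(theta - math.pi / 2)
        grad = (plus - minus) / 2
        theta -= lr * grad  # classical update between quantum calls
    return theta
```

With 50 steps the loop makes 100 QPU submissions, so any fixed latency per submission is paid 100 times; this is the cost that a low-latency client-server link aims to keep small.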

Scheme TBD

Summary

| Node | Cores / qubits | Memory | InfiniBand |
|---|---|---|---|
| a64 | 48 | 32 GB HBM2 | Yes |
| x86 | 64 | 1 TB DDR4 | Yes |
| Frontal | 64 | 1 TB DDR4 | Yes |
| QPU | 32 qubits | N/A | No |